🧠 Neural Networks

Machine Learning Made Simple

3-Minute Complete Guide

🤔 What is a Neural Network?

A brain-inspired algorithm that learns patterns by connecting simple processing units called neurons.

A neural network with input, hidden, and output layers

Think of a neural network like a team of specialists: Imagine you're trying to recognize handwritten numbers. You have a team where each person specializes in detecting specific features - one person looks for curves, another for straight lines, another for loops. They all share their findings, and together they make the final decision. That's roughly how neural networks work: each neuron picks up on one small feature, and the network combines those signals into a final answer.

A single artificial neuron - the building block of neural networks

Why is this revolutionary? Unlike traditional algorithms that follow fixed rules, neural networks learn from examples. Show them thousands of cat photos, and they'll learn to recognize cats in new photos they've never seen. It's like teaching a child - the more examples you show, the better they get at recognizing patterns.

🏗️ Neural Network Structure: The Architecture of Intelligence

The three-layer architecture: Input → Hidden → Output

Input Layer - The Sensory System:

Think of the input layer as your network's eyes and ears. If you're analyzing images, each pixel becomes an input neuron. For text analysis, each word or character gets its own input. It's like having thousands of tiny sensors, each responsible for one piece of information about your data.

Hidden Layers - The Brain's Processing Center:

This is where the magic happens! Hidden layers are like having multiple teams of experts, each building on the work of the previous team. The first hidden layer might detect simple features like edges in images. The second layer combines these edges to recognize shapes. The third layer combines shapes to recognize objects. It's a beautiful hierarchy of learning!

Deep neural network with multiple hidden layers

Output Layer - The Decision Maker:

The output layer gives you the final answer. For image classification, it might have 10 neurons (one for each digit 0-9). For spam detection, just 2 neurons (spam or not spam). Each output neuron represents the network's confidence in that particular answer.
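To make "confidence" concrete, here is a minimal sketch of how raw output scores are often turned into probabilities. It assumes a softmax output, which is a common choice but not the only one, and the three scores are made-up values for illustration.

```python
import math

def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    # Subtracting the largest score keeps exp() from overflowing
    # and does not change the result.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from three output neurons (say: cat, dog, bird).
raw_scores = [2.0, 1.0, 0.1]
confidences = softmax(raw_scores)

# The prediction is the output neuron with the highest confidence.
predicted_index = confidences.index(max(confidences))
print(confidences, predicted_index)
```

The highest raw score always gets the highest probability, so softmax changes how the answer is expressed, not which answer wins.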

⚡ How Neural Networks Learn: The Training Process

Backpropagation: How neural networks learn from their mistakes

Forward Pass - Making a Prediction:

Imagine you're showing the network a photo of a cat. The image data flows forward through the network like water through pipes. Each neuron processes the information it receives, applies some math, and passes the result to the next layer. Finally, the output layer says "I think this is a cat with 85% confidence."
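The forward pass can be sketched in a few lines of plain Python. This is an illustrative toy, not a real image classifier: the layer sizes, weights, and inputs are all made-up assumptions, and sigmoid is used as the activation purely for simplicity.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One fully connected layer.

    Each neuron takes a weighted sum of all inputs, adds its bias,
    and applies the sigmoid activation.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs

# Made-up values: 3 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [0.5, 0.1, 0.9]
hidden_weights = [[0.2, -0.4, 0.7], [-0.5, 0.3, 0.1]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.8]]
output_biases = [0.05]

# Data flows forward: inputs -> hidden layer -> output layer.
hidden = layer_forward(inputs, hidden_weights, hidden_biases)
output = layer_forward(hidden, output_weights, output_biases)
print(output)  # a single value between 0 and 1
```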

Backward Pass - Learning from Mistakes:

Here's the brilliant part! If the network was wrong (maybe it said "cat" but it was actually a "dog"), it doesn't just shrug and move on. It traces back through the entire network, asking "Which connections led to this mistake?" Then it adjusts those connections to be a little bit better next time. This process is called backpropagation.
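Here is a small sketch of that idea for the simplest possible case: one neuron with one weight and one bias. It assumes a sigmoid activation and a squared-error loss (both are choices made for this example), and all numbers are made up. The chain rule tells us how much each parameter contributed to the error.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(w, b, x):
    return sigmoid(w * x + b)

def loss(w, b, x, target):
    # Squared error, halved so the derivative is simply (y - target).
    return 0.5 * (predict(w, b, x) - target) ** 2

def gradients(w, b, x, target):
    """Chain rule: dL/dw = dL/dy * dy/dz * dz/dw, and likewise for b."""
    y = predict(w, b, x)
    dL_dy = y - target          # how the loss changes with the output
    dy_dz = y * (1.0 - y)       # derivative of sigmoid
    dL_dz = dL_dy * dy_dz
    return dL_dz * x, dL_dz     # (dL/dw, dL/db)

w, b, x, target = 0.5, 0.0, 2.0, 1.0
learning_rate = 0.5

loss_before = loss(w, b, x, target)
grad_w, grad_b = gradients(w, b, x, target)

# Nudge each parameter against its gradient.
w -= learning_rate * grad_w
b -= learning_rate * grad_b
loss_after = loss(w, b, x, target)

print(loss_before, loss_after)  # the loss shrinks after one update
```

In a real network the same chain rule is applied layer by layer, from the output back to the input, which is where the name comes from.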

Neural network learning: adjusting weights to reduce errors

Gradient Descent - The Optimization Engine:

Think of learning like rolling a ball down a hill to find the lowest point. The "hill" is the loss surface: its height at any point shows how wrong the network is for a particular set of weights, and the "lowest point" is the set of weights that gives the smallest error. Gradient descent is the algorithm that figures out which direction to roll the ball (adjust the weights) to get closer to that low point.
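A minimal sketch of gradient descent on a one-dimensional "hill". The loss function here is a made-up bowl shape with its lowest point at w = 3, chosen only so the answer is easy to check. Real networks have many weights and much bumpier surfaces.

```python
def loss(w):
    # A simple bowl whose lowest point is at w = 3.
    return (w - 3.0) ** 2

def loss_gradient(w):
    # The slope of the bowl at w.
    return 2.0 * (w - 3.0)

w = 0.0                # starting guess
learning_rate = 0.1    # how big each step is

for step in range(50):
    # Step downhill: move against the slope.
    w -= learning_rate * loss_gradient(w)

print(w, loss(w))  # w ends up very close to 3
```

If the learning rate is too large, the ball overshoots and can bounce away from the minimum. If it is too small, learning takes many more steps.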

🚀 Neural Network Applications: Changing the World

Convolutional Neural Networks processing image data

Computer Vision - Teaching Machines to See:

Neural networks have revolutionized how computers understand images. Your smartphone's camera can instantly recognize faces, objects, and even text in photos. Self-driving cars use neural networks to identify pedestrians, traffic signs, and other vehicles in real time. Medical imaging systems can detect tumors in X-rays and MRIs, and on some narrowly defined tasks their accuracy has matched or exceeded that of human doctors.

Neural networks recognizing objects in images

Natural Language Processing - Understanding Human Language:

Ever wondered how Google Translate works, or how Siri understands what you're saying? Neural networks! They can translate between languages, generate human-like text, answer questions, and even write code. ChatGPT, which you might have used, is a massive neural network trained on billions of text examples.

Recommendation Systems - Predicting What You'll Love:

Netflix knows what movies you'll enjoy, Spotify creates perfect playlists, and Amazon suggests products you actually want to buy. Neural networks analyze your behavior patterns, compare them with millions of other users, and predict what you'll like next. It's like having a personal assistant who knows your tastes better than you do!

Neural networks powering modern AI applications

🎯 Types of Neural Networks: Specialized Tools for Different Jobs

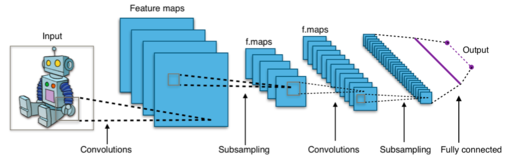

Convolutional Neural Network (CNN) for image processing

Convolutional Neural Networks (CNNs) - The Vision Specialists:

CNNs are like having specialized detectives for images. They use "filters" that scan across images looking for specific patterns - edges, textures, shapes. It's like having a magnifying glass that can detect every important detail in a photo. This makes them perfect for image recognition, medical imaging, and even analyzing satellite photos.
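To see a filter in action, here is a small sketch that slides a 2x2 filter across a tiny 4x4 "image". The image and filter values are made up for illustration. Strictly speaking this computes cross-correlation, which is what deep learning libraries usually call convolution.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    At each position, multiply overlapping values and add them up.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Tiny image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A filter that responds where brightness jumps from left to right.
vertical_edge_filter = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge_filter)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is zero in flat regions and large exactly where the edge is. In a trained CNN the filter values are learned rather than written by hand.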

Recurrent Neural Network (RNN) for sequence data

Recurrent Neural Networks (RNNs) - The Memory Masters:

RNNs have memory! Unlike regular neural networks that treat each input independently, RNNs remember what they've seen before. This makes them perfect for understanding sequences - like predicting the next word in a sentence, analyzing stock market trends over time, or understanding speech patterns. They're like having a conversation partner who remembers the entire context of your discussion.
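The "memory" is a hidden state that gets carried from one step to the next. Below is a deliberately tiny sketch with a single number as the hidden state. The weights are made-up values and the tanh activation is an assumption for this example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the new input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that end with the same inputs but start differently.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)
print(states_b)
```

Both sequences end with the same inputs, yet the final states differ, because the first sequence still "remembers" the 1.0 it saw at the start.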

Transformer Networks - The Language Virtuosos:

Introduced in 2017, Transformers use a mechanism called attention to look at all parts of the input simultaneously instead of reading it one step at a time. They're behind breakthrough AI systems like GPT and BERT. Think of them as having multiple spotlights that can focus on different parts of a sentence at once, understanding complex relationships between words that are far apart.
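The "spotlight" idea can be sketched as scaled dot-product attention. This is a simplified illustration: the token vectors are made up, and the learned projection matrices that real Transformers apply to produce queries, keys, and values are left out.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score every key against this query, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        # Softmax turns scores into attention weights that sum to 1.
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional token vectors, used as queries,
# keys, and values at once (self-attention without projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token attends to every token in the sequence, and all rows are computed independently, which is why the work parallelizes well.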

Autoencoder: learning to compress and reconstruct data

🔧 Key Concepts: The Building Blocks of Neural Intelligence

Different activation functions and their shapes

Activation Functions - The Decision Makers:

Every neuron needs to decide: "Should I fire or not?" Activation functions make this decision. ReLU (Rectified Linear Unit) is like a simple on/off switch - if the input is positive, pass it through; if negative, output zero. Sigmoid squashes everything between 0 and 1, like a smooth dimmer switch. Each function gives the network different capabilities for learning complex patterns.
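Both functions described above fit in a line or two of Python. A minimal sketch:

```python
import math

def relu(x):
    # Pass positive inputs through unchanged; output zero otherwise.
    return max(0.0, x)

def sigmoid(x):
    # Squash any input into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

for x in [-2.0, 0.0, 3.0]:
    print(x, relu(x), sigmoid(x))
```

Without a non-linear activation like these, stacking layers would add nothing: several linear layers in a row collapse into a single linear layer.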

Weights and Biases - The Learning Parameters:

Think of weights as the strength of connections between neurons, and biases as each neuron's personal threshold for activation. During training, the network adjusts these millions of tiny parameters to get better at its task. It's like tuning a massive orchestra where each instrument (neuron) needs to play at just the right volume (weight) and timing (bias).
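To get a feel for how quickly those parameters add up, here is a small sketch that counts the weights and biases in a fully connected network. The layer sizes are a hypothetical example (784 inputs for a 28x28 image, 128 hidden neurons, 10 outputs), not something prescribed by this guide.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Every neuron has one weight per input from the previous layer,
    plus one bias of its own.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out
        biases = n_out
        total += weights + biases
    return total

# Hypothetical network: 784 inputs -> 128 hidden -> 10 outputs.
print(count_parameters([784, 128, 10]))  # 101770
```

Even this small network has over a hundred thousand adjustable numbers, and large modern models have billions.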

Gradient descent finding the optimal solution

Loss Functions - The Report Card:

Loss functions measure how wrong the network's predictions are. Mean Squared Error averages the squared differences between your guesses and the correct answers, so large errors count far more than small ones. Cross-entropy loss is well suited to classification - it heavily penalizes confident wrong answers. The network's goal is always to minimize this loss, getting better with each iteration.
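Both loss functions are short enough to write by hand. This sketch uses made-up predictions, and the cross-entropy version assumes a single example whose correct class is given by its index.

```python
import math

def mean_squared_error(predictions, targets):
    # Average of the squared differences.
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def cross_entropy(predicted_probs, true_index):
    # Negative log of the probability given to the correct class.
    return -math.log(predicted_probs[true_index])

mse = mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0])
print(mse)

# The correct class is index 0 in all three cases.
confident_right = cross_entropy([0.9, 0.1], 0)
unsure = cross_entropy([0.5, 0.5], 0)
confident_wrong = cross_entropy([0.1, 0.9], 0)
print(confident_right, unsure, confident_wrong)
```

The confidently wrong prediction gets by far the largest loss, which is the penalty described above.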

⚡ Neural Networks vs Other Algorithms: When to Choose What

Neural networks compared to other machine learning algorithms

Neural Networks' Superpowers:

Pattern Recognition Excellence: Neural networks excel at finding complex, non-linear patterns that other algorithms miss. They can recognize faces in photos, understand speech, and even generate art. It's like having a pattern-detection superpower that gets stronger with more data and complexity.

Automatic Feature Learning: Unlike traditional algorithms where you need to manually engineer features, neural networks automatically discover what's important. Show them raw pixel data, and they'll learn to detect edges, shapes, and objects on their own. It's like having a student who not only learns the answers but figures out what questions to ask.

Neural networks can model complex relationships between inputs and outputs

Neural Networks' Limitations:

Data Hungry: Neural networks are like talented students who need lots of examples to learn well. While a decision tree might work with hundreds of examples, neural networks often need thousands or millions. For small datasets, simpler algorithms like Random Forest or SVM might be better choices.

Black Box Nature: Neural networks are notoriously difficult to interpret. They can tell you "this is a cat" but can't easily explain why they think so. If you need to understand the reasoning behind decisions (like in medical diagnosis or legal applications), more interpretable algorithms might be preferable.

Computational Requirements: Training neural networks requires significant computational power and time. A simple logistic regression might train in seconds, while a neural network could take hours or days. Consider your computational budget and time constraints.

🎓 Next Steps & Best Practices

🚀 Advanced Techniques:

- Transfer Learning: Use pre-trained models

- Regularization: Dropout, L1/L2 penalties

- Batch Normalization: Stabilize training

- Learning Rate Scheduling: Adjust the learning rate during training

- Ensemble Methods: Combine multiple networks

- Hyperparameter Tuning: Optimize learning rate, batch size, and architecture
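As one example from the list above, here is a minimal sketch of dropout. It assumes the common "inverted dropout" variant, where surviving activations are scaled up during training so nothing needs to change at prediction time. The activations, drop probability, and seed are made-up values.

```python
import random

def dropout(activations, drop_prob, rng):
    """Inverted dropout for training time.

    Each activation is zeroed with probability drop_prob. Survivors
    are scaled by 1 / (1 - drop_prob) so the expected value stays
    the same.
    """
    keep_prob = 1.0 - drop_prob
    return [
        a / keep_prob if rng.random() < keep_prob else 0.0
        for a in activations
    ]

rng = random.Random(42)  # fixed seed so runs are repeatable
hidden = [0.5, 1.0, 1.5, 2.0]
dropped = dropout(hidden, drop_prob=0.5, rng=rng)
print(dropped)
```

Randomly silencing neurons stops the network from relying too heavily on any single one, which tends to reduce overfitting. At prediction time dropout is simply switched off.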

💡 Best Practices:

- Start Simple: Begin with basic architectures

- Normalize Data: Scale inputs to [0,1] or [-1,1]

- Monitor Overfitting: Use validation sets

- Save Checkpoints: Don't lose training progress

- Use GPU: Accelerate training significantly

- Experiment: Try different architectures
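The normalization tip is easy to try out. This is a minimal sketch of min-max scaling, and the pixel values are made up. In practice the minimum and maximum should come from the training set and be reused for validation and test data.

```python
def min_max_scale(values, low=0.0, high=1.0):
    """Rescale values linearly into the range [low, high]."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # All values are identical; avoid dividing by zero.
        return [low for _ in values]
    scale = (high - low) / (v_max - v_min)
    return [low + (v - v_min) * scale for v in values]

pixels = [0, 64, 128, 255]
scaled = min_max_scale(pixels)               # into [0, 1]
centered = min_max_scale(pixels, -1.0, 1.0)  # into [-1, 1]
print(scaled)
print(centered)
```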

💡 Practice Projects:

Build neural networks for these exciting projects:

- 🖼️ Image classifier (cats vs dogs)

- 📝 Text sentiment analysis

- 🎵 Music genre classification

- 📈 Stock price prediction

- 🎮 Game playing AI

- 🗣️ Speech recognition system

⚠️ Important Reminders:

Always remember to:

- Normalize your input data before training

- Split data into train/validation/test sets

- Start with simple architectures and add complexity gradually

- Monitor both training and validation loss

- Save your best models during training

- Use appropriate loss functions for your problem type
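The train/validation/test split from the reminders can be sketched like this. The 70/15/15 proportions and the seed are assumptions for illustration. Choose proportions that suit your dataset size.

```python
import random

def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle the samples and split them into train/validation/test."""
    shuffled = list(samples)
    # A fixed seed makes the split repeatable between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever is left over
    return train, val, test

train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Train on the first set, tune on the second, and touch the test set only once at the end, so it gives an honest estimate of how the model handles unseen data.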